Let me tell you a story in two parts.

The first is complex, but it marks the before and after (and explains why I'm still hooked on this dilemma).

The second is practical: how you can take advantage of it today.

How do you design a shopping system that works equally well on voice, screens, wearables and multiple languages without having to rebuild everything every time?

Instead of linear flows (search -> view product -> add to cart -> pay), I proposed something different: grouping moments in time.

No single paths. Multi-path.

But here's the technical part that had me obsessed:

Each "moment" was not a fixed component of the Design System. It was a group of information that auto-generated into a multimodal component on the fly.

Let me explain this because it's the core of everything:

#

#

In a traditional Design System you have:

* A button

* A product card

* A payment form

They are pre-designed and static components.

In a multimodal system, you have information groups that turn into components according to context:

Information group: "Confirmation of purchase of AA batteries"

Contains:

* Product name

* Price

* Saved payment method

* Shipping address

* Estimated delivery date

And this group self-renders as:

🔲 On Echo Show (visual + voice):

-> Card with product image

-> "1-Click Buy" button

-> Voice: "Should I confirm your order for Duracell batteries for €8.99?"

🗣️ On Echo Dot (voice only + light):

-> No visual

-> Voice: "Duracell AA batteries, €8.99, arriving tomorrow. Say 'confirm' or 'cancel'"

-> Pulsing blue LED ring (waiting for response) -> green (confirmed) -> red (cancelled)

👆 In mobile app (visual + touch):

-> Expandable card with full details

-> Large "Confirm purchase" button

-> Swipe up for more options

📱 On smartwatch (minimal notification):

-> "Your usual battery order - €8.99"

-> Two buttons: ✓ and ✗

-> Vibration on confirmation

🤖 In AI agent (API only):

-> No visual or voice

-> Consume endpoint:

-> Receive:

#

#

The same group of information generates 5 different components automatically.

You don't build 5 separate components.

You build a system that knows what to render based on the device.

The best part is that:

When the user changes device mid-flow, the component regenerates in the new context without losing state.

#

#

Suppose that not only the flow is resumed between devices, but the component reconfigures itself in real time according to the "information group" and the channel in which it appears.

* User is cooking and asks Alexa by voice: "Is there any oil left?"

* Alexa responds by voice: "There's little left. You can order your favorite."

* Alexa activates the "recurring order" component in voice-only mode, asking: "Should I order it for today?"

* User responds with a gesture (moving their hand close to Alexa):

* The system translates that gesture as "yes, order oil" because the light pattern and distance are configured for that action.

* Without human intervention, the system groups all relevant info (product, address, payment method, history) into a base object that can be interpreted in any context.

* The user, already out of the house, opens the Amazon mobile app:

* The system detects the channel change and instantly generates a touch component, with a "Cancel order" button, brand change options, and a summary notification.

* All state, options, and flow context are maintained, but the component is not the same as in Alexa: it automatically adapts to the touch and visual capabilities of the device.

The system understands that there is no "button to order oil", but the essence of the order, which can take shape as a voice phrase, onscreen option, wearable vibration, or an AI agent API request.

Thus each "moment" of the experience is multimodal and adaptable, not just cross-device.

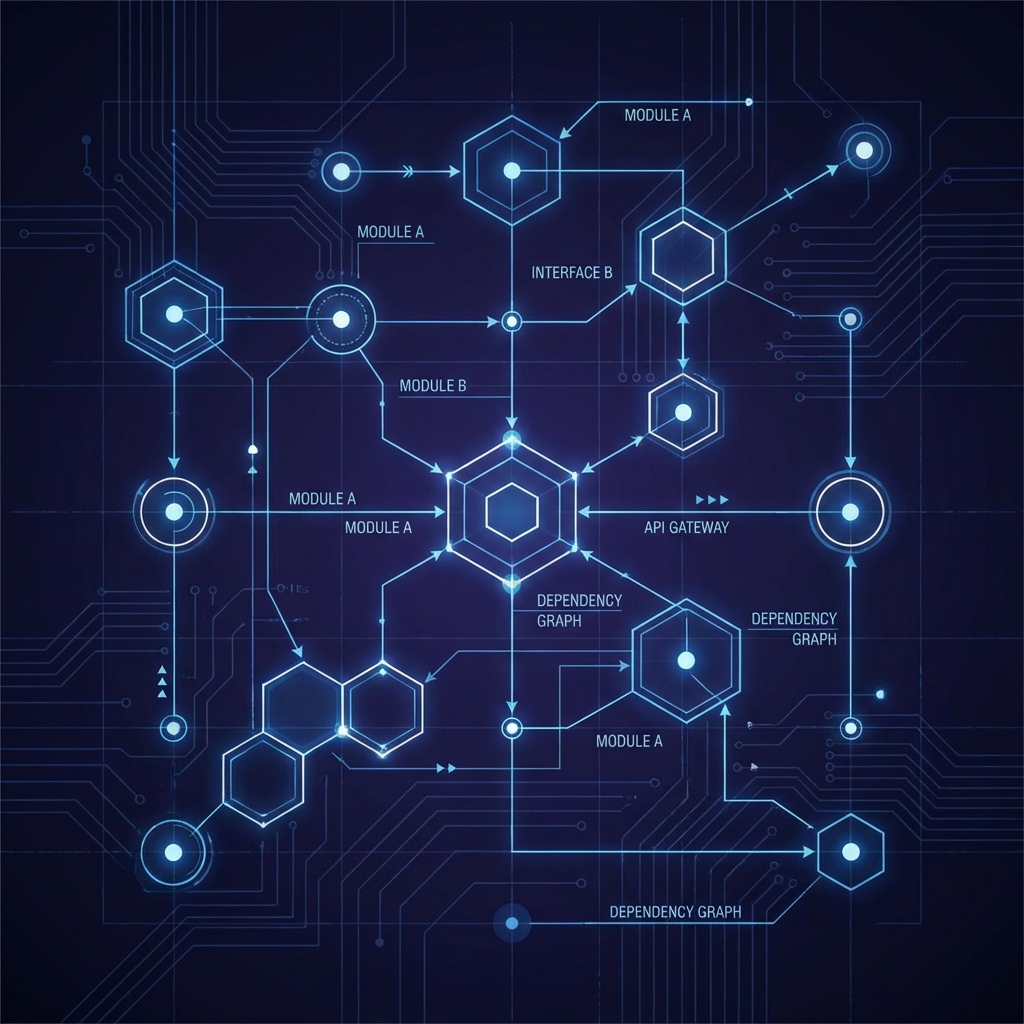

And this was possible because each multimodal component had these layers:

🔲 Visual layer: 2D components of traditional DS (cards, buttons, forms)

🗣️ Conversational layer: voice text fragments

👆 Input layer: interaction rules (touch, voice, gestures, distance sensors)

🔌 Data layer: API with the information group

🧭 Navigation layer: transition rules (where you come from, where you're going)

Depending on the device, the system activated the necessary layers and generated the component on the fly.

In 2022, this sounded abstract.

Because that doesn't scale when you have 15 different Alexa device types, plus mobile app, plus web, plus wearables.

In 2025, with AI agents buying alone...

AI agents are now buying by themselves.

You ask an assistant: "Hire 10 creators for my campaign".

The agent searches, compares, pays the $50 with your prior permission, and delivers results.

No app. No checkout. No forms.

The AI agent is just another "device", even if it acts without visual or voice. Just business logic.

#

#

#

This is not science fiction. The numbers are clear:

📊 $1.7 trillion in AI-driven commerce projected for 2030 (Edgar Dunn & Co.)

📊 25% of online sales will be performed by AI agents by then (PayPal CEO)

📊 1M+ merchants on Shopify already enabled to sell through conversational agents

📊 58% of financial institutions attribute revenue growth to IA (McKinsey)

In Alexa Shopping in 2022, this was an experiment.

#

#

Stripe exemplifies it perfectly:

A single API and context, infinite uses.

Not manual integrations but rules, tokens and components that adapt to each channel.

If you need a new component for each context, you fall behind.

If you define the information groups well, the system self-generates and scales alone.

How to start building for this future (without rewriting everything)

The good news: you don't need to throw away your current system.

#

#

Instead of designing "a product card", ask yourself:

What critical information do I need to show at this moment?

(Name, price, availability, payment method...)

Then define how that information renders according to context:

* Visual: card + button

* Voice: phrase + confirmation

* API: JSON with the same data

* Wearable: minimal notification

#

#

Your "information group" should live in an independent data layer.

The presentation layer decides how to show it according to the device.

#

#

The flow state should not live in the visual component.

It should live in a navigation layer that persists between devices.

Example: user starts on voice, continues in app, ends in web.

Same flow. Multiple contexts.

#

#

Instead of manually creating variants for each context, define rules:

"If device = Alexa Dot -> voice only"

"If device = Echo Show -> voice + visual"

"If device = AI agent -> API only"

#

#

Each auto-generated component must record:

* What information did it contain?

* In what device was it rendered?

* What layers were activated?

* How did the user respond?

All auditable. Always.

Why I'm so excited about this

3 years ago, at Alexa Shopping, I started exploring multimodal components for purchases. I didn't even know if anyone would understand why, but I was determined to take the Design System to its next level.

Today I see AI agents buying in milliseconds, jumping between contexts, managing permissions...

And I think: this is exactly what we were trying to resolve.

The difference is that then there was no massive demand.

Now, with $1.7 trillion projected in agentic commerce for 2030...

It's no longer an experiment.

But for now, the question is simple:

To what extent do your components adapt or just copy themselves to each channel?

Because that's the difference between scaling and having to create a new variant every time something changes.

💬 I'd love to read your cases, challenges or doubts. Have you ever managed to have the same flow live in a thousand contexts without redoing components?"

Leave it in comments. I want to know what other teams are exploring.

#DesignSystems #ProductLeadership #APIs #IA #AgenticCommerce #Multimodal #AlexaShopping

The first is complex, but it marks the before and after (and explains why I'm still hooked on this dilemma).

The second is practical: how you can take advantage of it today.

PART 1: Alexa Shopping and the dilemma of layers

When I worked at Amazon Alexa Shopping, months before the ChatGPT hype, I became obsessed with one question: How do you design a shopping system that works equally well on voice, screens, wearables and multiple languages without having to rebuild everything every time?

I spent 6 months thinking about this. There were no references, gurus or literature on "Multimodal Design Systems applied to shopping".

Instead of linear flows (search -> view product -> add to cart -> pay), I proposed something different: grouping moments in time.

No single paths. Multi-path.

But here's the technical part that had me obsessed:

Each "moment" was not a fixed component of the Design System. It was a group of information that auto-generated into a multimodal component on the fly.

Let me explain this because it's the core of everything:

The auto-generated multimodal component

In a traditional Design System you have:

* A button

* A product card

* A payment form

They are pre-designed and static components.

In a multimodal system, you have information groups that turn into components according to context:

Information group: "Confirmation of purchase of AA batteries"

Contains:

* Product name

* Price

* Saved payment method

* Shipping address

* Estimated delivery date

And this group self-renders as:

🔲 On Echo Show (visual + voice):

-> Card with product image

-> "1-Click Buy" button

-> Voice: "Should I confirm your order for Duracell batteries for €8.99?"

🗣️ On Echo Dot (voice only + light):

-> No visual

-> Voice: "Duracell AA batteries, €8.99, arriving tomorrow. Say 'confirm' or 'cancel'"

-> Pulsing blue LED ring (waiting for response) -> green (confirmed) -> red (cancelled)

👆 In mobile app (visual + touch):

-> Expandable card with full details

-> Large "Confirm purchase" button

-> Swipe up for more options

📱 On smartwatch (minimal notification):

-> "Your usual battery order - €8.99"

-> Two buttons: ✓ and ✗

-> Vibration on confirmation

🤖 In AI agent (API only):

-> No visual or voice

-> Consume endpoint: POST /orders/confirm

-> Receive: {orderId, status, estimatedDelivery}

And here's the magic:

The same group of information generates 5 different components automatically.

You don't build 5 separate components.

You build a system that knows what to render based on the device.
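To make that concrete, here is a minimal Python sketch of one information group feeding several renderers. Everything in it is an assumption for illustration: `InformationGroup`, the three `render_*` functions, the device names and the output shapes are hypothetical, not the actual Alexa Shopping implementation.

```python
from dataclasses import dataclass, asdict
from typing import Callable, Dict


@dataclass(frozen=True)
class InformationGroup:
    """Hypothetical information group for a purchase confirmation."""
    product_name: str
    price_eur: float
    payment_method: str
    shipping_address: str
    estimated_delivery: str


def render_voice(group: InformationGroup) -> dict:
    # Voice-only devices get a spoken prompt and nothing visual.
    prompt = (
        f"{group.product_name}, €{group.price_eur:.2f}, "
        f"arriving {group.estimated_delivery}. Say 'confirm' or 'cancel'."
    )
    return {"speech": prompt}


def render_watch(group: InformationGroup) -> dict:
    # Wearables get a one-line notification and two actions.
    return {
        "text": f"{group.product_name} - €{group.price_eur:.2f}",
        "actions": ["confirm", "cancel"],
    }


def render_api(group: InformationGroup) -> dict:
    # Agents get the raw data: no visual or voice layer.
    return asdict(group)


# Illustrative device names (assumed); a real registry would be larger.
RENDERERS: Dict[str, Callable[[InformationGroup], dict]] = {
    "echo_dot": render_voice,
    "smartwatch": render_watch,
    "ai_agent": render_api,
}


def render(group: InformationGroup, device: str) -> dict:
    """Pick the renderer for the device and apply it to the same group."""
    return RENDERERS[device](group)


batteries = InformationGroup(
    product_name="Duracell AA batteries",
    price_eur=8.99,
    payment_method="saved card",
    shipping_address="home",
    estimated_delivery="tomorrow",
)
```

Calling `render(batteries, "echo_dot")` and `render(batteries, "ai_agent")` produces two different components from the same data. Supporting a new device means registering one more renderer, not redesigning the group.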

The best part is that:

When the user changes device mid-flow, the component regenerates in the new context without losing state.

Real example (beyond typical cross-device):

Suppose the flow is not only resumed between devices, but the component also reconfigures itself in real time according to the "information group" and the channel in which it appears.

* User is cooking and asks Alexa by voice: "Is there any oil left?"

* Alexa responds by voice: "There's only a little left. You can order your favorite."

* Alexa activates the "recurring order" component in voice-only mode, asking: "Should I order it for today?"

* User responds with a gesture (moving their hand close to Alexa):

* The system translates that gesture as "yes, order oil" because the light pattern and distance are configured for that action.

* Without human intervention, the system groups all relevant info (product, address, payment method, history) into a base object that can be interpreted in any context.

* The user, already out of the house, opens the Amazon mobile app:

* The system detects the channel change and instantly generates a touch component, with a "Cancel order" button, brand change options, and a summary notification.

* All state, options, and flow context are maintained, but the component is not the same as in Alexa: it automatically adapts to the touch and visual capabilities of the device.

The system understands that there is no "button to order oil", only the essence of the order, which can take shape as a voice phrase, an on-screen option, a wearable vibration, or an AI agent API request.

Thus each "moment" of the experience is multimodal and adaptable, not just cross-device.

Same flow. Multiple devices. One single system.

And this was possible because each multimodal component had these layers:

🔲 Visual layer: 2D components of traditional DS (cards, buttons, forms)

🗣️ Conversational layer: voice text fragments

👆 Input layer: interaction rules (touch, voice, gestures, distance sensors)

🔌 Data layer: API with the information group

🧭 Navigation layer: transition rules (where you come from, where you're going)

Depending on the device, the system activated the necessary layers and generated the component on the fly.
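One way to picture layer activation is as a set of flags per device. The sketch below is a hedged illustration: the `Layer` names follow the five layers listed above, but the device-to-layer mapping, and the assumption that the data and navigation layers are always on, are mine.

```python
from enum import Flag, auto
from typing import Dict, List


class Layer(Flag):
    VISUAL = auto()
    CONVERSATIONAL = auto()
    INPUT = auto()
    DATA = auto()
    NAVIGATION = auto()


# Assumption: data and navigation are active on every device.
BASE = Layer.DATA | Layer.NAVIGATION

# Hypothetical mapping from device to the layers it activates.
DEVICE_LAYERS: Dict[str, Layer] = {
    "echo_show": BASE | Layer.VISUAL | Layer.CONVERSATIONAL | Layer.INPUT,
    "echo_dot": BASE | Layer.CONVERSATIONAL | Layer.INPUT,
    "mobile_app": BASE | Layer.VISUAL | Layer.INPUT,
    "ai_agent": BASE,
}


def active_layers(device: str) -> List[str]:
    """Return the names of the layers activated for a device."""
    layers = DEVICE_LAYERS[device]
    return [layer.name for layer in Layer if layer in layers]
```

With this mapping, `active_layers("ai_agent")` returns only the data and navigation layers, which matches the idea of an agent as a device with no visual or voice surface.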

In 2022, this sounded abstract.

But building every variant by hand doesn't scale when you have 15 different Alexa device types, plus the mobile app, the web and wearables.

In 2025, with AI agents buying alone...

PART 2: Why this matters and how to take advantage of it now

What changed this week: AI agents are now buying by themselves.

You ask an assistant: "Hire 10 creators for my campaign".

The agent searches, compares, pays the $50 with your prior permission, and delivers results.

No app. No checkout. No forms.

The AI agent is just another "device", even if it acts without visual or voice. Just business logic.

The data that backs it up

This is not science fiction. The numbers are clear:

📊 $1.7 trillion in AI-driven commerce projected for 2030 (Edgar Dunn & Co.)

📊 25% of online sales will be performed by AI agents by then (PayPal CEO)

📊 1M+ merchants on Shopify already enabled to sell through conversational agents

📊 58% of financial institutions attribute revenue growth to AI (McKinsey)

In Alexa Shopping in 2022, this was an experiment.

In 2025, with agents buying alone, it's the next wave.

The case that makes it tangible: when Stripe understood the game

Stripe exemplifies it perfectly:

A single API and context, infinite uses.

Not manual integrations but rules, tokens and components that adapt to each channel.

If you need a new component for each context, you fall behind.

If you define the information groups well, the system self-generates and scales alone.

How to start building for this future (without rewriting everything)

The good news: you don't need to throw away your current system.

How to start?

You can start applying these principles step by step:

1. Stop thinking about fixed components. Start thinking about information groups.

Instead of designing "a product card", ask yourself:

What critical information do I need to show at this moment?

(Name, price, availability, payment method...)

Then define how that information renders according to context:

* Visual: card + button

* Voice: phrase + confirmation

* API: JSON with the same data

* Wearable: minimal notification

2. Separate data from presentation.

Your "information group" should live in an independent data layer.

The presentation layer decides how to show it according to the device.

3. Make your components capable of continuing across multiple devices.

The flow state should not live in the visual component.

It should live in a navigation layer that persists between devices.

Example: user starts on voice, continues in app, ends in web.

Same flow. Multiple contexts.
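Here is a rough sketch of what "state lives in the navigation layer" could look like. The `NavigationLayer` class below is a hypothetical in-memory stand-in for a store shared by every channel. The flow id, step names and channel names are invented for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FlowState:
    flow_id: str
    step: str
    data: Dict[str, str] = field(default_factory=dict)
    channels: List[str] = field(default_factory=list)


class NavigationLayer:
    """In-memory stand-in for a store shared by every channel (assumed)."""

    def __init__(self) -> None:
        self._flows: Dict[str, FlowState] = {}

    def start(self, flow_id: str, channel: str, step: str) -> FlowState:
        state = FlowState(flow_id=flow_id, step=step, channels=[channel])
        self._flows[flow_id] = state
        return state

    def advance(self, flow_id: str, step: str, **data: str) -> FlowState:
        # Progress is recorded on the flow, not on any visual component.
        state = self._flows[flow_id]
        state.step = step
        state.data.update(data)
        return state

    def resume(self, flow_id: str, channel: str) -> FlowState:
        # State is looked up by flow id, so any channel can pick it up.
        state = self._flows[flow_id]
        if state.channels[-1] != channel:
            state.channels.append(channel)
        return state


nav = NavigationLayer()
nav.start("oil-order", channel="voice", step="awaiting_confirmation")
nav.advance("oil-order", step="ordered", product="oil")
resumed = nav.resume("oil-order", channel="mobile_app")
```

The mobile app never asks the voice device anything. It resumes the flow by id and gets the same step and data, then renders them its own way.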

4. Turn manual processes into automatic rendering rules.

Instead of manually creating variants for each context, define rules:

"If device = Echo Dot -> voice only"

"If device = Echo Show -> voice + visual"

"If device = AI agent -> API only"

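Rules like these can live as plain data instead of code branches. The sketch below assumes a hypothetical `Rule` type and an API-only fallback for unknown devices. The fallback is my assumption, not something the rules above specify.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Rule:
    device: str
    modalities: Tuple[str, ...]


# The three rules quoted above, expressed as data.
RENDERING_RULES: List[Rule] = [
    Rule(device="echo_dot", modalities=("voice",)),
    Rule(device="echo_show", modalities=("voice", "visual")),
    Rule(device="ai_agent", modalities=("api",)),
]

# Assumed fallback: an unknown device gets the data and nothing else.
DEFAULT_MODALITIES: Tuple[str, ...] = ("api",)


def modalities_for(device: str) -> Tuple[str, ...]:
    """Return the modalities of the first rule matching the device."""
    for rule in RENDERING_RULES:
        if rule.device == device:
            return rule.modalities
    return DEFAULT_MODALITIES
```

Adding a new device then becomes a one-line change to `RENDERING_RULES` rather than a new hand-built variant.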
5. Make traceability automatic, not optional.

Each auto-generated component must record:

* What information did it contain?

* On what device was it rendered?

* What layers were activated?

* How did the user respond?

All auditable. Always.
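A minimal sketch of such a record, assuming a hypothetical `RenderRecord` type and an in-memory list standing in for a real audit log. The field names map one-to-one to the four questions above. The timestamp is an extra I added.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class RenderRecord:
    information: dict             # what information it contained
    device: str                   # on what device it was rendered
    layers: List[str]             # which layers were activated
    user_response: Optional[str]  # how the user responded, if known
    rendered_at: str              # assumed extra: UTC timestamp


# Stand-in for an append-only audit store (assumption).
AUDIT_LOG: List[str] = []


def record_render(
    information: dict,
    device: str,
    layers: List[str],
    user_response: Optional[str] = None,
) -> RenderRecord:
    """Build a record and append it to the log as one JSON line."""
    record = RenderRecord(
        information=information,
        device=device,
        layers=layers,
        user_response=user_response,
        rendered_at=datetime.now(timezone.utc).isoformat(),
    )
    AUDIT_LOG.append(json.dumps(asdict(record), sort_keys=True))
    return record
```

If the rendering function itself calls `record_render`, traceability stops being something each team has to remember to add.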

Why I'm so excited about this

3 years ago, at Alexa Shopping, I started exploring multimodal components for purchases. I didn't even know if anyone would understand why, but I was determined to take the Design System to its next level.

Today I see AI agents buying in milliseconds, jumping between contexts, managing permissions...

And I think: this is exactly what we were trying to solve.

The difference is that then there was no massive demand.

Now, with $1.7 trillion projected in agentic commerce for 2030...

It's no longer an experiment.

But for now, the question is simple:

Do your components adapt to each channel, or do they just get copied into it?

Because that's the difference between scaling and having to create a new variant every time something changes.

💬 I'd love to read your cases, challenges or doubts. Have you ever managed to have the same flow live in a thousand contexts without redoing components?

Leave them in the comments. I want to know what other teams are exploring.

#DesignSystems #ProductLeadership #APIs #AI #AgenticCommerce #Multimodal #AlexaShopping